In 2025, more people than ever are trying to make money on YouTube, and for good reason. Stories of creators earning thousands from faceless channels have flooded TikTok, Instagram, and YouTube itself. The rise of AI tools like ChatGPT, text-to-speech software, and stock footage sites made it seem easy: upload five videos a day, stay anonymous, and build passive income.

But that approach is no longer safe.

On July 15, 2025, YouTube introduced a major policy overhaul targeting low-effort, AI-generated content. This “inauthentic content” rule demonetizes videos that are mass-produced, repetitive, or lack original insight, especially those relying on AI narration without human context.

Creators using recycled scripts, robotic voices, or batch uploads now risk demonetization, strikes, or even removal from the YouTube Partner Program.

If you’re starting out or following popular AI “blueprint” tutorials, this guide will help you understand the new rules, how YouTube enforces them, and how to create ethical, monetizable content that stays compliant using smart AI tools like Knolli.

Key shifts:

If you're uploading content that relies heavily on:

You’re at high risk of demonetization. Even YouTube Shorts channels are being flagged.

The following infographic highlights formats YouTube is now actively flagging.

The channel StoriezTold was flagged for repetitive AI-narrated content about animals. Despite different video titles, every video followed the same voiceover-and-slideshow format. This pattern triggered YouTube's inauthentic content filter.

YouTube defines it as:

A channel uploads 100 Top-10 videos using the same script template and AI voice: demonetized.

See More: 12 AI Content Creation Tools for YouTubers, Podcasters & Bloggers [2025]

YouTube’s July 2025 policy isn’t theoretical; enforcement is already happening. Here are high-profile cases that show how quickly creators can get demonetized or banned:

A YouTube channel called True Crime Case Files, with over 83,000 subscribers, was removed entirely after posting 150+ videos narrating AI-generated murder stories as fact. One video, falsely describing a crime in Colorado, led to public confusion and local media inquiries.

NBC News uncovered a coordinated network of channels spreading AI-generated fake news about Black celebrities, including Diddy, Denzel Washington, and Steve Harvey, using deepfake images and robotic narration. Several of these channels were demonetized or terminated.

YouTube began striking or deleting channels that used deepfake voices of murdered children to narrate fictional crime stories.

The platform updated its harassment policies in January 2024 specifically to address this disturbing trend.

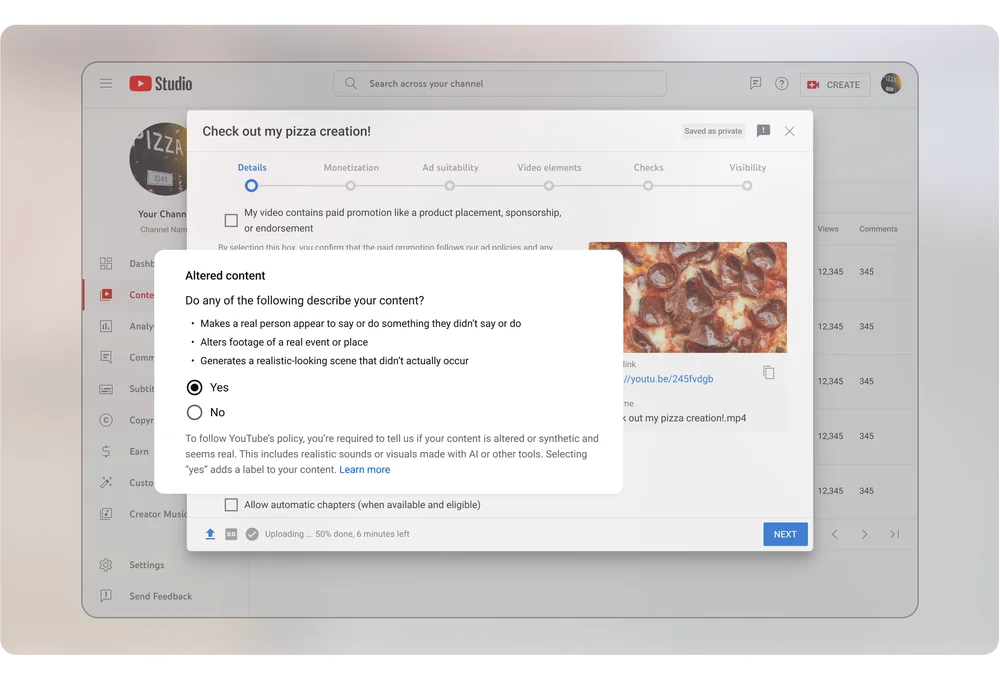

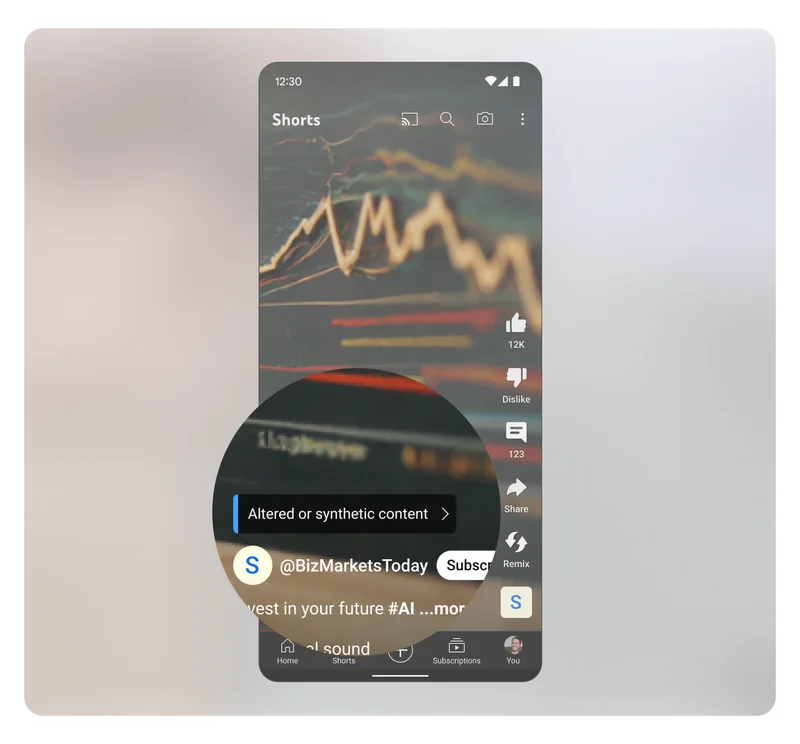

Every upload now asks:

"Does this video contain realistic, altered, or synthetic content?"

Source: YouTube Blog

Failing to toggle “Yes” when required can lead to:

Disclosure is mandatory if you use:

YouTube uses:

See More: AI in Content Creation: Challenges & How to Overcome Them

Safe Scenarios (You’re Good If...)

Example: YouTuber Matt Par uses AI tools for scriptwriting but adds personal insights and commentary to enhance the content's value and engagement.

Why It Works: By infusing personal analysis and commentary, creators ensure their content offers unique perspectives, aligning with YouTube's emphasis on original value and expertise.

Example: Steven Bartlett, known for "The Diary of a CEO," employs AI to generate content but ensures his presence through voiceovers and personal storytelling, maintaining authenticity.

Why It Works: Displaying one's face or voice humanizes the content, fostering a stronger connection with the audience and adhering to YouTube's guidelines against faceless, AI-generated content.

Example: Bruno Sartori, a Brazilian comedian, creates satirical content using deepfake technology but ensures all content is well-researched, cited, and structured to provide context and clarity.

Why It Works: Proper citation and structured presentation not only enhance credibility but also comply with YouTube's policies on transparency and originality.

Knolli helps creators avoid "AI slop" and stay monetized by:

See More: Top AI Tools Every Content Creator Must Use in 2025

No. You can use AI tools if you add personal insight, voice, or visuals.

You risk content removal or a strike. Always disclose when using realistic AI content.

Yes. Embed your Knolli copilot in the video description or comment section.

Yes. You can gate AI copilots behind subscriptions, pay-per-chat, or one-time access.